Use it for setting up small dimension tables or tiny amounts of data for experimenting with SQL syntax, or with HBase tables. Do not use it for large ETL jobs or benchmark tests for load operations. If more than one inserted row has the same value for the HBase key column, only the last inserted row with that value is visible to Impala queries. VALUES statements to effectively update rows one at a time, by inserting new rows with the same key values as existing rows. SELECT operation copying from an HDFS table, the HBase table might contain fewer rows than were inserted, if the key column in the source table contained duplicate values.

Data definition language statements let you create and modify BigQuery resources usingstandard SQLquery syntax. You can use DDL commands to create, alter, and delete resources, such as tables,table clones,table snapshots,views,user-defined functions , androw-level access policies. The following example creates the procedure SelectFromTablesAndAppend, which takes target_date as an input argument and returns rows_added as an output. The procedure creates a temporary table DataForTargetDate from a query; then, it calculates the number of rows in DataForTargetDate and assigns the result to rows_added.

Next, it inserts a new row into TargetTable, passing the value of target_date as one of the column names. Finally, it drops the tableDataForTargetDate and returns rows_added. From the source table, you can specify the condition according to which rows should be selected in the "condition" parameter of this clause. If this is not present in the query, all of the columns' rows are inserted into the destination table. Daemon runs under, typically the impala user, must have read permission for the files in the source directory of an INSERT ... SELECToperation, and write permission for all affected directories in the destination table.

An INSERT OVERWRITE operation does not require write permission on the original data files in the table, only on the table directories themselves. The USING clause specifies a query that names the source table and specifies the data that COPY copies to the destination table. You can use any form of the SQL SELECT command to select the data that the COPY command copies. The default project of the references is fixed and does not depend on the future queries that invoke the new materialized view.

Otherwise, all references in query_expression must be qualified with project names. By default, the new table inherits partitioning, clustering, and options metadata from the source table. You can customize metadata in the new table by using the optional clauses in the SQL statement. For example, if you want to specify a different set of options for the new table, then include the OPTIONSclause with a list of options and values. A useful technique within PostgreSQL is to use the COPY command to insert values directly into tables from external files. Files used for input by COPY must either be in standard ASCII text format, whose fields are delimited by a uniform symbol, or in PostgreSQL's binary table format.

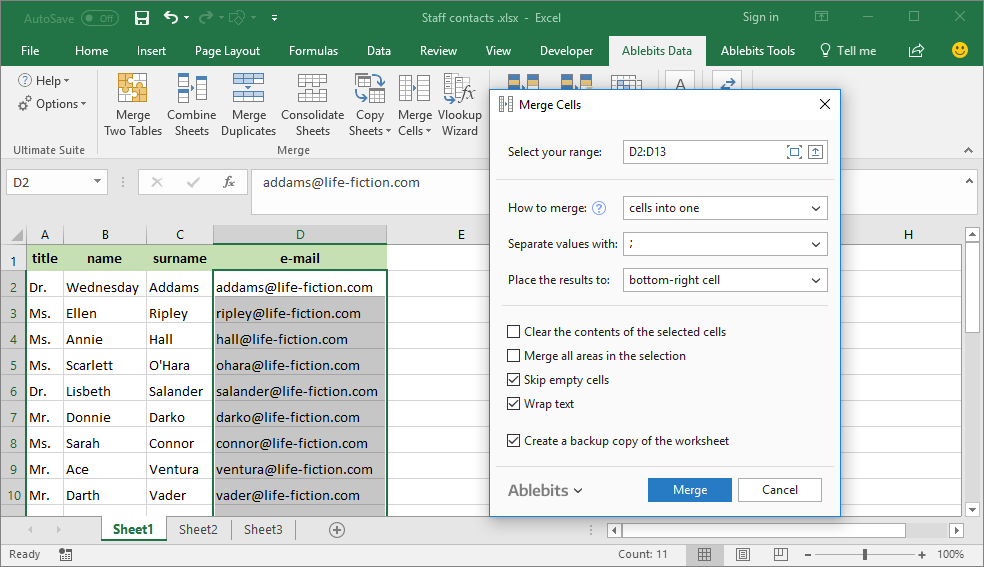

Common delimiters for ASCII files are tabs and commas. When using an ASCII formatted input file with COPY, each line within the file will be treated as a row of data to be inserted and each delimited field will be treated as a column value. In this article, you will learn how we can copy data from one table to another table.

These tables could be in the same database or different databases. The MySQL copy table process can copy a specific dataset or all data from the source table to the destination table. We can use the MySQL copy table process to replicate the issues that occurred on production servers, which helps developers rectify the issues quickly. This is how most relational database systems will order columns in the result set when running a query that uses an asterisk in place of individual column names. Following the VALUES clause must be of the same data type as the column it is being inserted into.

If the optional column-target expression is omitted, PostgreSQL will expect there to be one value for each column in the literal order of the table's structure. If there are fewer values to be inserted than columns, PostgreSQL will attempt to insert a default value for each omitted value. Note that the difference between the ROWS_PARSED and ROWS_LOADED column values represents the number of rows that include detected errors.

However, each of these rows could include multiple errors. To view all errors in the data files, use the VALIDATION_MODE parameter or query the VALIDATE function. By default, the copied columns have the same names in the destination table that they have in the source table.

If you want to give new names to the columns in the destination table, enter the new names in parentheses after the destination table name. If you enter any column names, you must enter a name for every column you are copying. The duplicate values in the park column — three occurrences of Prospect Park and two of Central Park — appear in this result set, even though the query included the DISTINCT keyword. Although individual columns in a result set may contain duplicate values, an entire row must be an exact duplicate of another for it to be removed by DISTINCT. In this case, every value in the name column is unique so DISTINCT doesn't remove any rows when that column is specified in the SELECT clause. Every SQL query begins with a SELECT clause, leading some to refer to queries generally as SELECT statements.

After the SELECT keyword comes a list of whatever columns you want returned in the result set. These columns are drawn from the table specified in the FROM clause. On its own, a query will not change any existing data held in a table. It will only return the information about the data which the author of the query explicitly requests. The information returned by a given query is referred to as its result set. Result sets typically consist of one or more columns from a specified table, and each column returned in a result set can hold one or more rows of information.

If you want to copy existing rows of source tables into a new destination table, first you need to create a destination table like source table. If you specify columns, the number of columns must equal the number of columns selected by the query. If you do not specify any columns, the copied columns will have the same names in the destination table as they had in the source if COPY creates destination_table. The number of columns in the column name list must match the number of columns in the underlying SQL query. If the columns in the table of the underlying SQL query is added or dropped, the view becomes invalid and must be recreated. For example, if the age column is dropped from the mydataset.people table, then the view created in the previous example becomes invalid.

The following example creates a table named newtable in mydataset. The NOT NULL modifier in the column definition list of a CREATE TABLE statement specifies that a column or field is created in REQUIRED mode. The preceding example demonstrates the insertion of two rows from the table book_queue into the books table by way of a SELECT statement that is passed to the INSERT INTO command. Any valid SELECT statement may be used in this context.

In this case, the query selects the result of a function called nextval() from a sequence called book_ids, followed by the title, author_id and subject_id columns from the book_queue table. Notice that the optional column target list is specified identically to the physical structure of the table, from left to right. There can be multiple reasons to create a table from another table. One of them can be to simply merge the columns from multiple tables to create a larger schema.

This is generally observed when a report or analysis needs to be created. In other cases, we pick specific columns from tables and create a new one. Sometimes, we do some mathematical calculations to like averaging, summation and put them in a new schema. To achieve all this, we can use a DAX or Data Analysis Expression which comes bundled with the Power BI software.

Using formulas and expressions gives you the power to manipulate the data columns in a powerful and effective way. [,…] Invalid for external tables, specifies columns from the SELECTlist on which to sort the superprojection that is automatically created for this table. The ORDER BY clause cannot include qualifiers ASCor DESC. Suppose you want to create a table that has a list of movies whose rating is NC-17. In this example, the source table is movies, and the destination table is tbl_movies_Rating_NC17.

To filter the data, we are using the WHERE clause on the rating column. The COPY operation verifies that at least one column in the target table matches a column represented in the data files. If a match is found, the values in the data files are loaded into the column or columns. If no match is found, a set of NULL values for each record in the files is loaded into the table. Defines the encoding format for binary string values in the data files. The option can be used when loading data into binary columns in a table.

By reading this guide, you learned how to write basic queries, as well as filter and sort query result sets. While the commands shown here should work on most relational databases, be aware that every SQL database uses its own unique implementation of the language. You should consult your DBMS's official documentation for a more complete description of each command and their full sets of options. The INSERT INTO SELECT statement selects data from one table and inserts it into an existing table. Previously when we've combined tables we have pulled some columns from one and some columns from another and combined the results into the same rows.

A UNION stacks all the resulting rows from one table on top of all the resulting rows from another table. The columns used must have the same names and data types in order for UNION to work. With the INSERT OVERWRITE TABLE syntax, each new set of inserted rows replaces any existing data in the table.

This is how you load data to query in a data warehousing scenario where you analyze just the data for a particular day, quarter, and so on, discarding the previous data each time. You might keep the entire set of data in one raw table, and transfer and transform certain rows into a more compact and efficient form to perform intensive analysis on that subset. Where clause in the source table statement needs to be included to copy data from the source table to the destination table. The following is the statement used in MySQL to copy a part of the data from the source table to the destination table. To better manage this we can alias table and column names to shorten our query. We can also use aliasing to give more context about the query results.

The only thing it can't do is transformations, so you would need to add other PowerShell commands to achieve this, which can get cumbersome. The following example creates an external table from a CSV file and explicitly specifies the schema. It also specifies the field delimeter ('|') and sets the maximum number of bad records allowed. To create an externally partitioned table, use the WITH PARTITION COLUMNSclause to specify the partition schema details. BigQuery validates the column definitions against the external data location. The schema declaration must strictly follow the ordering of the fields in the external path.

For more information about external partitioning, seeQuerying externally partitioned data. The option list allows you to set materialized view options such as a whether refresh is enabled. You can include multiple options using a comma-separated list.

Value may be passed to the DELIMITERSclause, which defines the character which delimits columns on a single line in the filename. If omitted, PostgreSQL will assume that the ASCII file is tab-delimited. The optional WITH NULL clause allows you to specify in what form to expect NULL values.

In addition, we added a more condition in the WHERE clause of the SELECT statement to retrieve only sales data in 2017. This creates an InnoDB table with three columns, a, b, and c. The ENGINE option is part of the CREATE TABLEstatement, and should not be used following the SELECT; this would result in a syntax error. The same is true for other CREATE TABLE options such as CHARSET. Now run INSERT INTO.. SELECT statement to insert the data from source table to destination table. In this example, we will see how we can copy the data from the source table to the destination table in another database.

To demonstrate, I have created a database named DEV_SakilaDB, and we will copy the data from the actor table of the sakila database to the tblActor table of the DEV_SakilaDB database. Specifies an existing named file format to use for loading data into the table. The named file format determines the format type (CSV, JSON, etc.), as well as any other format options, for the data files.

Following the WHERE keyword in this example syntax is a search condition, which is what actually determines which rows get filtered out from the result set. A search condition is a set of one or more predicates, or expressions that can evaluate one or more value expressions. In SQL, a value expression — also sometimes referred to as a scalar expression — is any expression that will return a single value.

A value expression can be a literal value , a mathematical expression, or a column name. The VALUES clause lets you insert one or more rows by specifying constant values for all the columns. The number, types, and order of the expressions must match the table definition. The order of columns in the column permutation can be different than in the underlying table, and the columns of each input row are reordered to match. If the number of columns in the column permutation is less than in the destination table, all unmentioned columns are set to NULL. When you perform a JOIN between two tables, PostgreSQL creates a virtual table that contains data from both the original table and the table identified by the JOIN.

It's convenient to think of this virtual table as containing all columns from the records in the FROM table as well as all columns from the JOIN table. Before PostgreSQL displays the results, it picks out just the columns that you've mentioned in the SELECT statement. Also, this option allows you to transfer data to a server that doesn't have a network connection , or restore the data in multiple servers at once, but can't be done across DBMS's. Then you need to create the destination database in server-B as described earlier.

Then I ran this script on server-B in the new database I just created. The next step for testing is to create the destination database. To create the database usingSQL Server Management Studio , right-click on "Databases" and select "New Database…". I left the default options, but you can create it with 15 GB of initial size and 1 GB of growth. I also left the recovery model as "simple" to avoid a log file growth.

SQL How to copy text from one table to another , Sql copy data from one column to another in different table. There is simple query to update record from one to another column in MySql. Most of the time you just need to copy only particular record from one table to another but sometime you need to copy the whole column values to another in same table. After listing the three system variables and their values, COPY tells you if a table was dropped, created, or updated during the copy. Then COPY lists the number of rows selected, inserted, and committed.